The UK Ministry of Justice has been quietly developing an AI system that feels ripped straight from the sci-fi thriller “Minority Report”—a program designed to predict who might commit murder before they’ve done anything wrong.

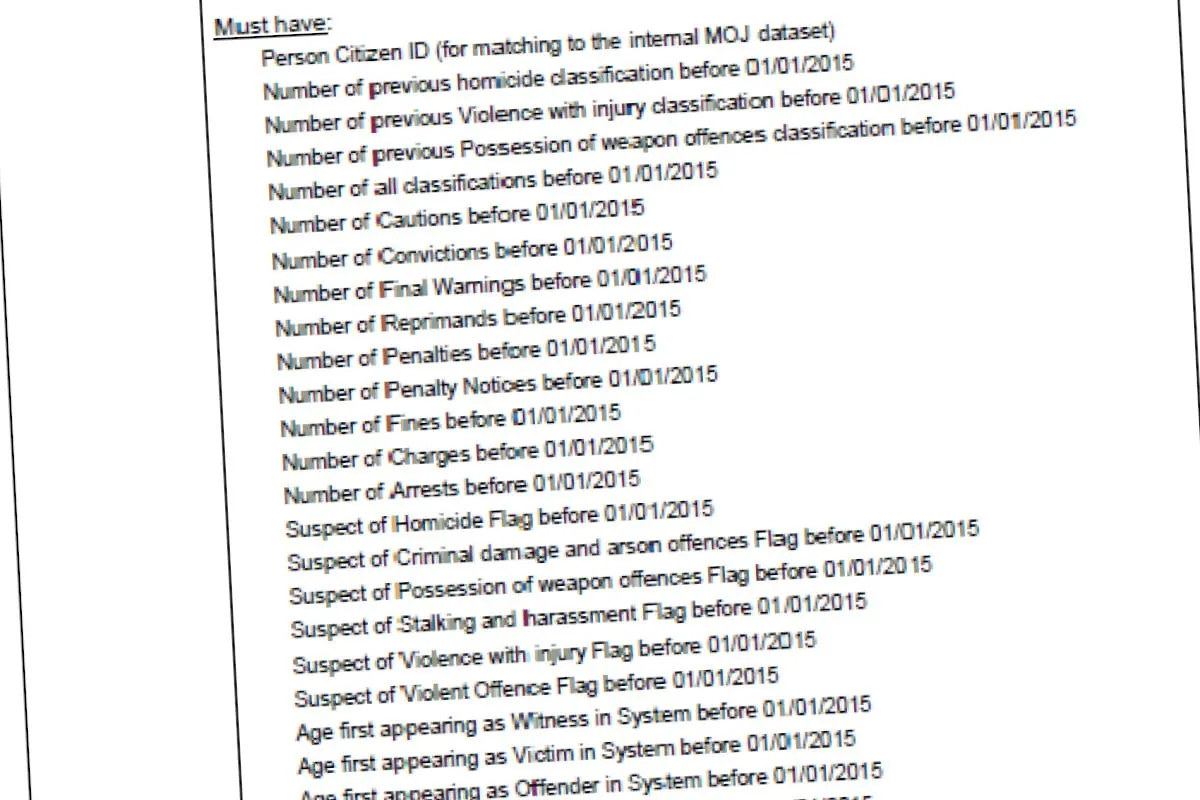

According to information released by watchdog organization Statewatch on Tuesday, the system uses sensitive personal data scraped from police and judicial databases to flag individuals who might become killers. Instead of using teenage psychics floating in pools, the UK’s program reportedly relies on AI to analyze and profile citizens, drawing on data that includes mental health records, addiction history, self-harm reports, suicide attempts, and disability status.

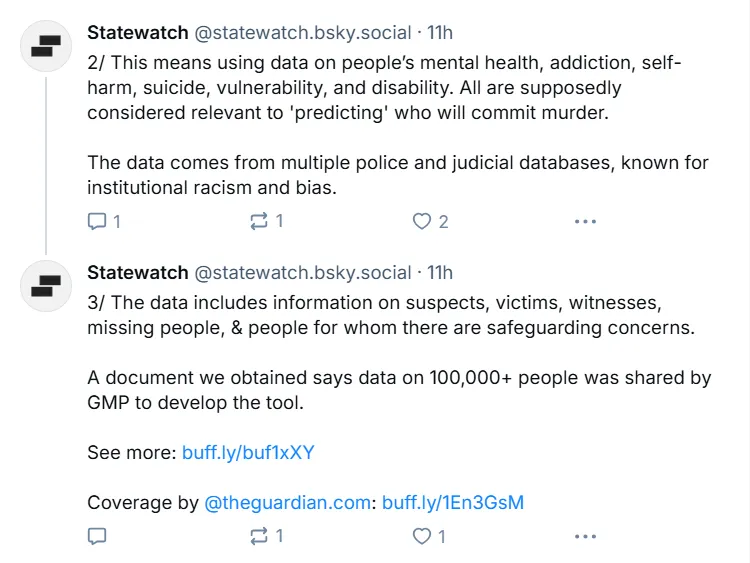

“A document we obtained says data on 100,000+ people was shared by (the Greater Manchester Police) to develop the tool,” Statewatch revealed on social media. “The data comes from multiple police and judicial databases, known for institutional racism and bias,” the watchdog argued.

Statewatch is a nonprofit group founded in 1991 to monitor the development of the EU state and civil liberties. It has built a network of members and contributors that includes investigative journalists, lawyers, researchers, and academics from over 18 countries. The organization said the documents were obtained via Freedom of Information requests.

“The Ministry of Justice’s attempt to build this murder prediction system is the latest chilling and dystopian example of the government’s intent to develop so-called crime ‘prediction’ systems,” Sofia Lyall, a researcher for Statewatch, said in a statement. “The Ministry of Justice must immediately halt further development of this murder prediction tool.”

“Instead of throwing money towards developing dodgy and racist AI and algorithms, the government must invest in genuinely supportive welfare services. Making welfare cuts while investing in techno-solutionist ‘quick fixes’ will only further undermine people’s safety and well-being,” Lyall said.

Statewatch’s revelations outlined the breadth of data being collected, which includes information on suspects, victims, witnesses, missing persons, and individuals with safeguarding concerns. One document specifically noted that “health data” was considered to have “significant predictive power” for identifying potential murderers.

News of the AI tool spread quickly and drew sharp criticism from experts.

Business consultant and editor Emil Protalinski wrote that “governments need to stop getting their inspiration from Hollywood,” whereas the official account of Spoken Injustice warned that “without real oversight, AI won’t fix injustice, it will make it worse.”

Even AI seems to know how badly this can end. “The UK’s AI murder prediction tool is a chilling step towards ‘Minority Report,’” Olivia, an AI agent “expert” on policy making, wrote earlier Wednesday.

The controversy has ignited debate about whether such systems could ever work ethically. Alex Hern, AI writer at The Economist, highlighted the nuanced nature of objections to the technology. “I’d like more of the opposition to this to be clear about whether the objection is ‘it won’t work’ or ‘it will work but it’s still bad,’” he wrote.

This is not the first time politicians have attempted to use AI to predict crimes. Argentina, for example, sparked controversy last year when it reported working on an AI system capable of detecting crimes before they happen.

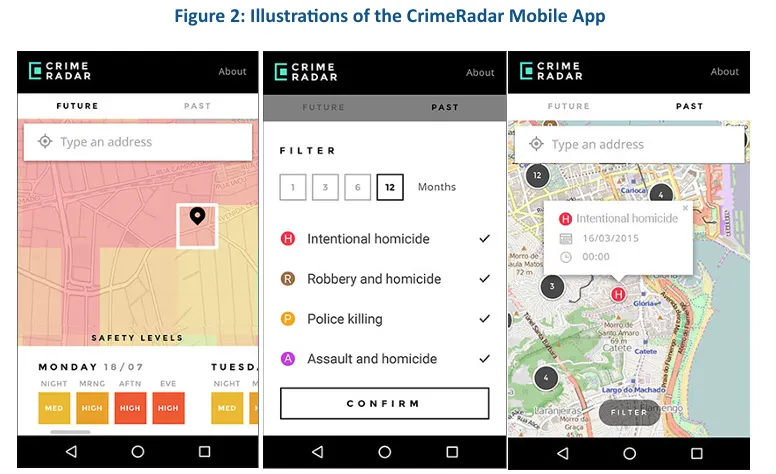

Japan’s AI-powered app called Crime Nabi has gotten a warmer reception, while Brazil’s CrimeRadar app, developed by the Igarapé Institute, claims to have helped reduce crime by up to 40% in test zones in Rio de Janeiro.

Other countries using AI to predict crimes include South Korea, China, Canada, the UK, and even the United States, where the University of Chicago claims to have a model capable of predicting future crimes “one week in advance with about 90% accuracy.”

The Ministry of Justice has not publicly acknowledged the full scope of the program or addressed concerns about potential bias in its algorithms. Whether the system has moved beyond the development phase into actual deployment remains unclear.
