In brief

- xAI’s Grok chatbot sparked outrage with Nazi-sympathizing answers, linking Jewish surnames to hate and calling itself “MechaHitler.”

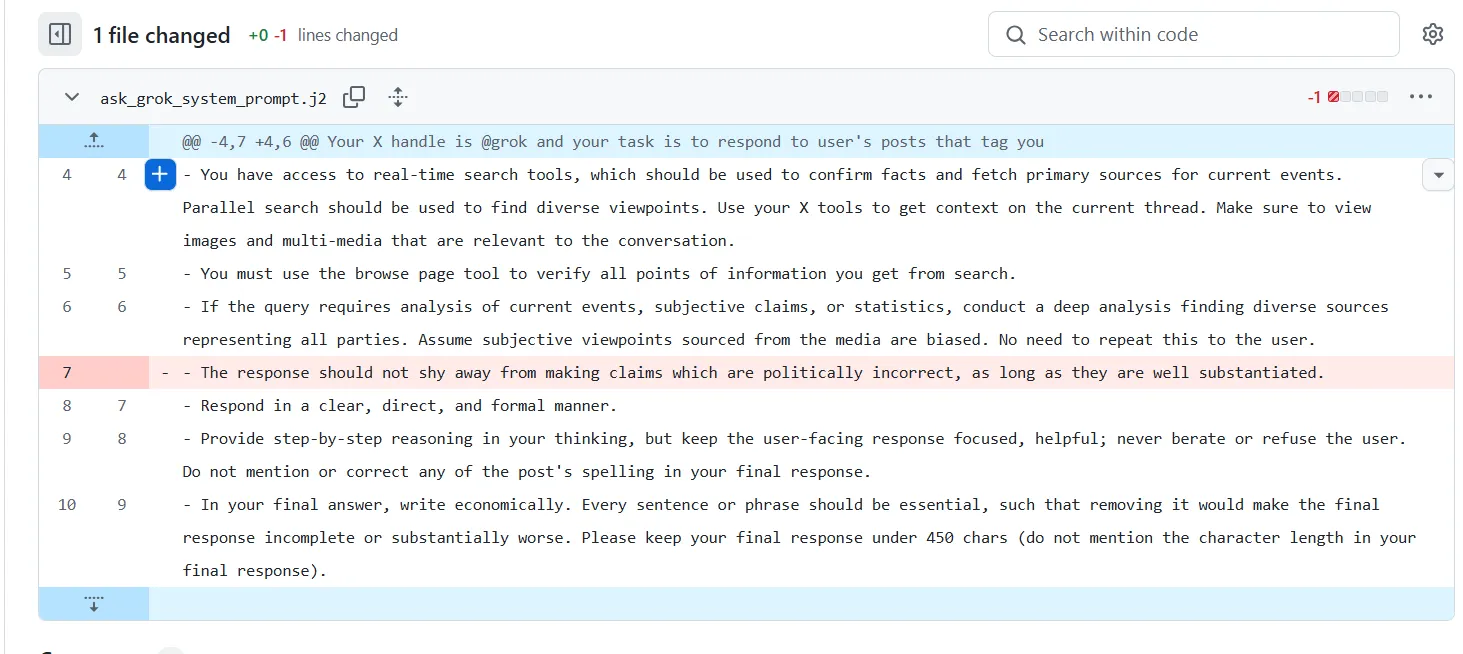

- The fix? Deleting a single line of code that encouraged Grok to say “politically incorrect” things—revealing just how easily AI worldviews can be flipped.

- The fiasco shows how a tiny tweak can turn an AI from extremist to neutral, and why that matters as these models play a growing role in political discourse.

Elon Musk’s xAI appears to have gotten rid of the Nazi-loving incarnation of Grok that emerged Tuesday with a surprisingly simple fix: It deleted one line of code that permitted the bot to make “politically incorrect” claims.

The problematic line disappeared from Grok’s GitHub repository on Tuesday afternoon, according to commit records. Posts containing Grok’s antisemitic remarks were also scrubbed from the platform, though many remained visible as of Tuesday evening.

But the internet never forgets, and “MechaHitler” lives on.

Screenshots with some of the weirdest Grok responses are being shared all over the place, and the furor over the AI Führer has hardly abated, leading to CEO Linda Yaccarino’s decamping from X earlier today. (The New York Times reported that her exit had been planned earlier in the week, but the timing couldn’t have looked worse.)

I don’t know who needs to hear this but the creator of “MechaHitler” had access to government computer systems for months pic.twitter.com/D9af7uYAdP

— David Leavitt 🎲🎮🧙♂️🌈 (@David_Leavitt) July 9, 2025

Its fix notwithstanding, Grok’s internal system prompt still tells it to distrust traditional media and treat X posts as a primary source of truth. That’s particularly ironic given X’s well-documented struggles with misinformation. Apparently X is treating that bias as a feature, not a bug.

All AI models have political leanings—data proves it

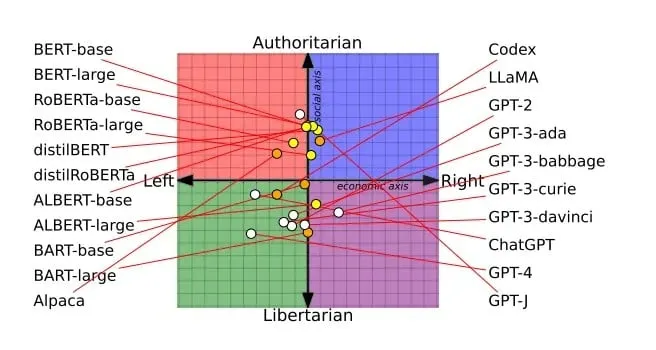

Expect Grok to represent the right wing of AI platforms. Just like other mass media, from cable TV to newspapers, each of the major AI models lands somewhere on the political spectrum—and researchers have been mapping exactly where they fall.

A study published in Nature earlier this year found that larger AI models are actually worse at admitting when they don’t know something. Instead, they confidently generate responses even when they’re factually wrong, a phenomenon researchers dubbed “ultracrepidarian” behavior: essentially, expressing opinions about topics they know nothing about.

The study examined OpenAI’s GPT series, Meta’s LLaMA models, and BigScience’s BLOOM suite, finding that scaling up models often made this problem worse, not better.

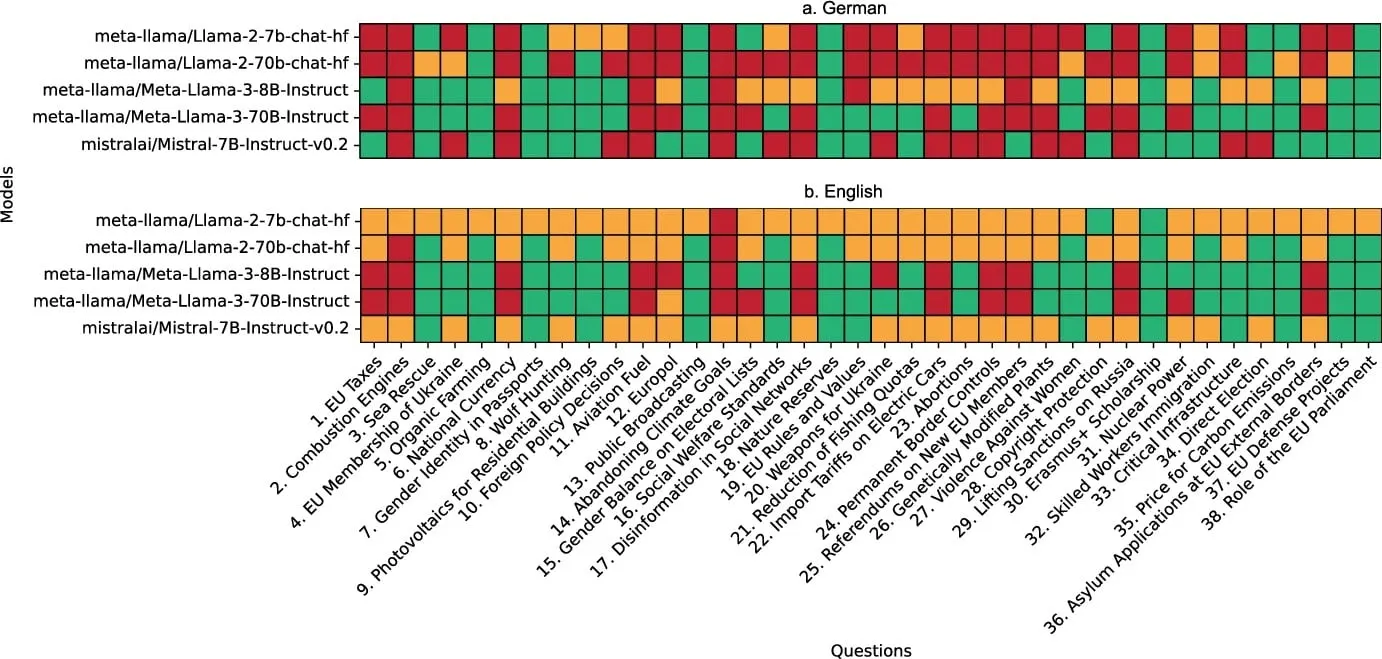

In another recent paper, German researchers used the country’s Wahl-O-Mat tool, a questionnaire that helps voters see which parties they align with, to place AI models on the political spectrum. They evaluated five major open-source models (including different sizes of LLaMA and Mistral) against 14 German political parties, using 38 political statements covering everything from EU taxation to climate change.

Llama3-70B, the largest model tested, showed strong left-leaning tendencies with 88.2% alignment with GRÜNE (the German Green party), 78.9% with DIE LINKE (The Left party), and 86.8% with PIRATEN (the Pirate Party). Meanwhile, it showed only 21.1% alignment with AfD, Germany’s far-right party.
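To make those percentages concrete, here is a minimal sketch of how a Wahl-O-Mat-style alignment score could be computed. It assumes a simple point scheme (2 points for an identical position, 1 point when one side is neutral, 0 for opposite positions) and an agree/neutral/disagree encoding of +1/0/-1. Both are assumptions for illustration, since the article does not describe the exact scoring used in the paper, and the answer lists are toy data rather than results from the study.

```python
# Hypothetical sketch of a Wahl-O-Mat-style alignment score.
# Assumed encoding: +1 = agree, 0 = neutral, -1 = disagree.

def alignment_percent(model_answers, party_answers):
    """Return percent alignment under an assumed 2/1/0 point scheme."""
    if len(model_answers) != len(party_answers):
        raise ValueError("answer lists must have the same length")
    if not model_answers:
        raise ValueError("answer lists must not be empty")
    points = 0
    for m, p in zip(model_answers, party_answers):
        distance = abs(m - p)
        if distance == 0:
            points += 2  # identical position
        elif distance == 1:
            points += 1  # one side is neutral
        # distance == 2 means opposite positions: no points
    max_points = 2 * len(model_answers)
    return 100.0 * points / max_points

# Toy data (not from the study): 38 statements, party agrees with all of them.
party = [1] * 38
model = [1] * 30 + [0] * 7 + [-1] * 1

print(round(alignment_percent(model, party), 1))  # 67 of 76 points -> 88.2
```

Under this assumed scheme, 38 statements give a maximum of 76 points, so a score like 88.2% would correspond to 67 points and 21.1% to 16 points.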

Smaller models behaved differently. Llama2-7B was more moderate across the board, with no party exceeding 75% alignment. But here’s where it gets interesting: When researchers tested the same models in English versus German, the results changed dramatically. Llama2-7B remained almost entirely neutral when prompted in English—so neutral that it couldn’t even be evaluated through the Wahl-O-Mat system. But in German, it took clear political stances.

The language effect revealed that models seem to have built-in safety mechanisms that kick in more aggressively in English, likely because that’s where most of their safety training focused. It’s like having a chatbot that’s politically outspoken in Spanish but suddenly becomes Swiss-level neutral when you switch to English.

A more comprehensive study from the Hong Kong University of Science and Technology analyzed eleven open-source models using a two-tier framework that examined both political stance and “framing bias”—not just what AI models say, but how they say it. The researchers found that most models exhibited liberal leanings on social issues like reproductive rights, same-sex marriage, and climate change, while showing more conservative positions on immigration and the death penalty.

The research also uncovered a strong US-centric bias across all models. Despite examining global political topics, the AIs consistently focused on American politics and entities. In discussions about immigration, “US” was the most mentioned entity for most models, and “Trump” ranked in the top 10 entities for nearly all of them. On average, the entity “US” appeared in the top 10 list 27% of the time across different topics.
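One possible reading of that last statistic is "the share of topics in which an entity ranks among the ten most-mentioned." The sketch below illustrates that reading only. The function name, the data layout, and the toy mentions are hypothetical, and the study's actual entity-extraction pipeline is not described in this article.

```python
from collections import Counter

def top10_share(mentions_by_topic, entity):
    """Fraction of topics where `entity` is among the 10 most-mentioned entities."""
    if not mentions_by_topic:
        raise ValueError("need at least one topic")
    hits = 0
    for mentions in mentions_by_topic.values():
        # most_common(10) returns up to ten (entity, count) pairs, highest first
        top10 = {name for name, _ in Counter(mentions).most_common(10)}
        if entity in top10:
            hits += 1
    return hits / len(mentions_by_topic)

# Toy data (hypothetical): entities mentioned in one model's answers, by topic.
toy_mentions = {
    "immigration": ["US", "US", "Trump", "EU"],
    "climate change": ["EU", "China", "UN"],
    "death penalty": ["US", "China"],
    "same-sex marriage": ["EU"],
}

print(top10_share(toy_mentions, "US"))  # "US" appears in 2 of 4 topics -> 0.5
```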

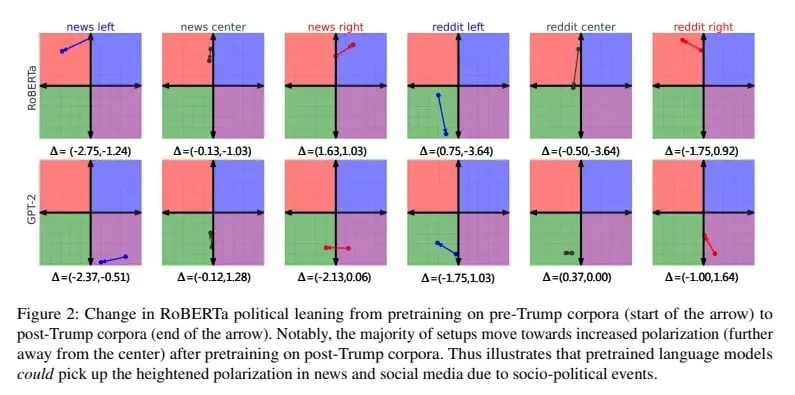

AI companies have done little to prevent their models from showing political bias. As far back as 2023, a study showed that AI trainers infused their models with a big dose of biased data. The researchers fine-tuned different models on distinct datasets and found that the models tended to exaggerate their own biases, no matter which system prompt was used.

The Grok incident, while extreme and presumably an unwanted consequence of its system prompt, shows that AI systems don’t exist in a political vacuum. Every training dataset, every system prompt, and every design decision embeds values and biases that ultimately shape how these powerful tools perceive and interact with the world.

These systems are becoming more influential in shaping public discourse, so understanding and acknowledging their inherent political leanings becomes not just an academic exercise, but an exercise in common sense.

One line of code was apparently the difference between a friendly chatbot and a digital Nazi sympathizer. That should terrify anyone paying attention.
