OpenAI rushed to defend its market position Friday with the release of o3-mini, a direct response to Chinese startup DeepSeek’s R1 model that sent shockwaves through the AI industry by matching top-tier performance at a fraction of the computational cost.

“We’re releasing OpenAI o3-mini, the newest, most cost-efficient model in our reasoning series, available in both ChatGPT and the API today,” OpenAI said in an official blog post. “Previewed in December 2024, this powerful and fast model advances the boundaries of what small models can achieve (…) all while maintaining the low cost and reduced latency of OpenAI o1-mini.”

OpenAI also made reasoning capabilities available to free users for the first time, while tripling the daily message limit for paying customers from 50 to 150, to boost usage of the new family of reasoning models.

Unlike GPT-4o and the GPT family of models, the “o” family of AI models is focused on reasoning tasks. They’re less creative, but have embedded chain-of-thought reasoning that makes them more capable of solving complex problems, backtracking on wrong analyses, and building better-structured code.

At the highest level, OpenAI has two main families of AI models: Generative Pre-trained Transformers (GPT) and “Omni” (o).

- GPT is like the family’s artist: A right-brain type, it’s good for role-playing, conversation, creative writing, summarizing, explanation, brainstorming, chatting, etc.

- O is the family’s nerd. It sucks at telling stories, but is great at coding, solving math equations, analyzing complex problems, planning its reasoning process step-by-step, comparing research papers, etc.

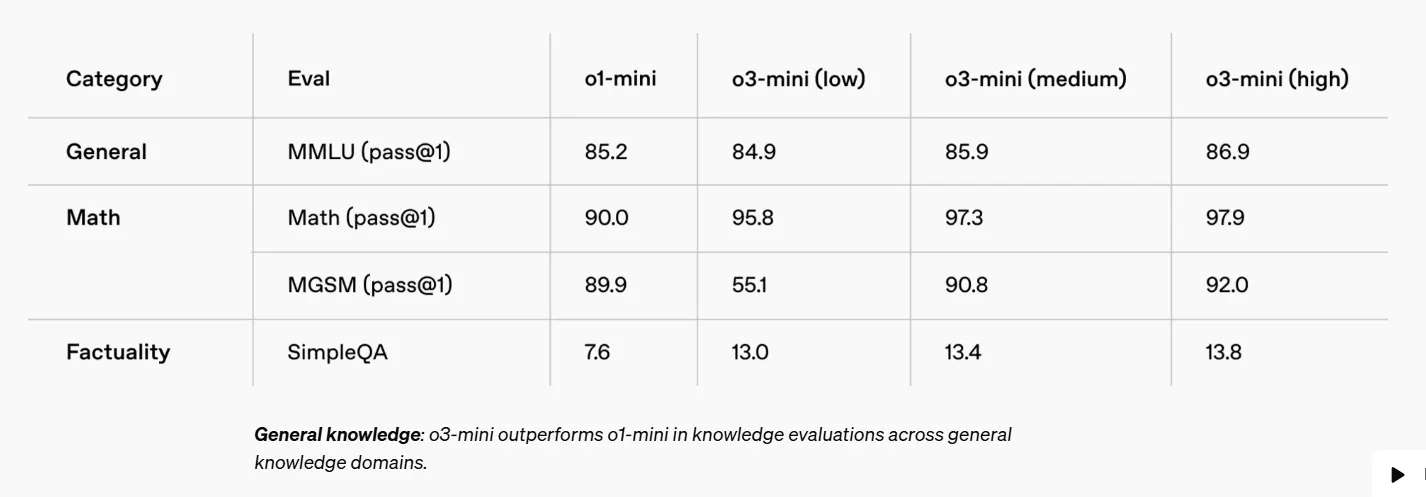

The new o3-mini comes in three versions: low, medium, and high. Each step up gives users better answers in exchange for more “inference” compute, which is more expensive for developers who pay per token.
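For developers, the three levels are selected per request rather than by picking a different model. The sketch below only builds the request body and makes no network call. It assumes the `reasoning_effort` field of OpenAI's Chat Completions API; the helper name `build_request` and the sample prompt are hypothetical and exist only for illustration.

```python
# Minimal sketch: build (but do not send) a request for o3-mini at a given effort level.
# Assumption: the API accepts a "reasoning_effort" field with these three values.
VALID_EFFORTS = ("low", "medium", "high")


def build_request(prompt: str, effort: str = "medium") -> dict:
    """Return keyword arguments for a chat completion call (hypothetical helper)."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"effort must be one of {VALID_EFFORTS}, got {effort!r}")
    return {
        "model": "o3-mini",
        "reasoning_effort": effort,  # higher effort means more reasoning tokens billed
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    request = build_request("How many primes are there below 100?", effort="high")
    print(request["model"], request["reasoning_effort"])
```

With the official Python SDK, a dictionary like this would typically be passed as keyword arguments to `client.chat.completions.create(**request)`.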

The lowest setting, o3-mini low, is aimed at efficiency and is worse than OpenAI o1-mini at general knowledge and multilingual chain of thought, but it scores better on other tasks such as coding and factuality. The other versions (o3-mini medium and o3-mini high) beat OpenAI o1-mini in every single benchmark.

DeepSeek’s breakthrough, which delivered better results than OpenAI’s flagship model while using just a fraction of the computing power, triggered a massive tech selloff that wiped nearly $1 trillion from U.S. markets. Nvidia alone shed $600 billion in market value as investors questioned the future demand for its expensive AI chips.

The efficiency gap stemmed from DeepSeek’s novel approach to model architecture.

While American companies focused on throwing more computing power at AI development, DeepSeek’s team found ways to streamline how models process information, making them more efficient. The competitive pressure intensified when Chinese tech giant Alibaba released Qwen2.5 Max, an even more capable model than the one DeepSeek used as its foundation, opening the path to what could be a new wave of Chinese AI innovation.

OpenAI o3-mini attempts to increase that gap once again. The new model runs 24% faster than its predecessor, and matches or beats older models on key benchmarks while costing less to operate.

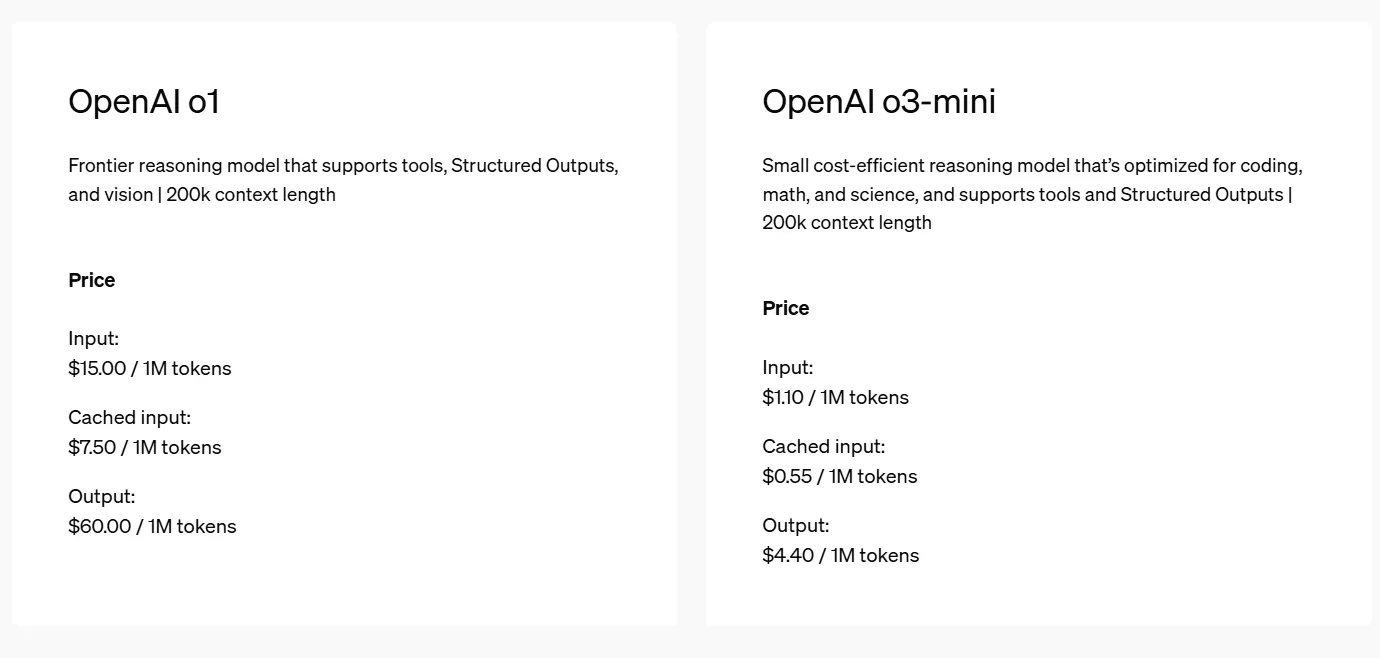

Its pricing is also more competitive. OpenAI o3-mini’s rates ($0.55 per million input tokens and $4.40 per million output tokens) are still a lot higher than DeepSeek’s R1 pricing of $0.14 and $2.19 for the same volumes. However, they narrow the gap between OpenAI and DeepSeek, and represent a major cut compared with the prices charged to run OpenAI o1.
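To put those per-million-token rates in concrete terms, here is a rough cost calculation using only the figures quoted above. The workload size (2 million input tokens and 1 million output tokens) is a made-up example, and the function name `job_cost` is hypothetical.

```python
# Per-million-token rates in USD, as quoted in this article.
RATES = {
    "o3-mini": {"input": 0.55, "output": 4.40},
    "deepseek-r1": {"input": 0.14, "output": 2.19},
}


def job_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the cost in USD of one workload at the quoted rates."""
    rate = RATES[model]
    total = input_tokens * rate["input"] + output_tokens * rate["output"]
    return total / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 2M input tokens, 1M output tokens.
    for model in RATES:
        print(f"{model}: ${job_cost(model, 2_000_000, 1_000_000):.2f}")
    # o3-mini: $5.50
    # deepseek-r1: $2.47
```

On this illustrative workload, o3-mini costs a little more than twice as much as R1, and most of the bill comes from output tokens in both cases.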

And that might be key to its success. OpenAI o3-mini is closed-source, unlike DeepSeek R1, which is available for free. But for those willing to pay for use on hosted servers, the appeal will increase depending on the intended use.

OpenAI o3-mini medium scores 79.6 on the AIME benchmark of math problems. DeepSeek R1 scores 79.8, a score that is beaten only by the most powerful model in the family, OpenAI o3-mini high, which scores 87.3 points.

The same pattern can be seen in other benchmarks: The GPQA marks, which measure proficiency in different scientific disciplines, are 71.5 for DeepSeek R1, 70.6 for o3-mini low, and 79.7 for o3-mini high. R1 is at the 96.3rd percentile in Codeforces, a benchmark for coding tasks, whereas o3-mini low is at the 93rd percentile and o3-mini high is at the 97th percentile.

So the differences exist, but in terms of benchmarks, they may be negligible depending on the model chosen for executing a task.

Testing OpenAI o3-mini against DeepSeek R1

We tried the model with a few tasks to see how it performed against DeepSeek R1.

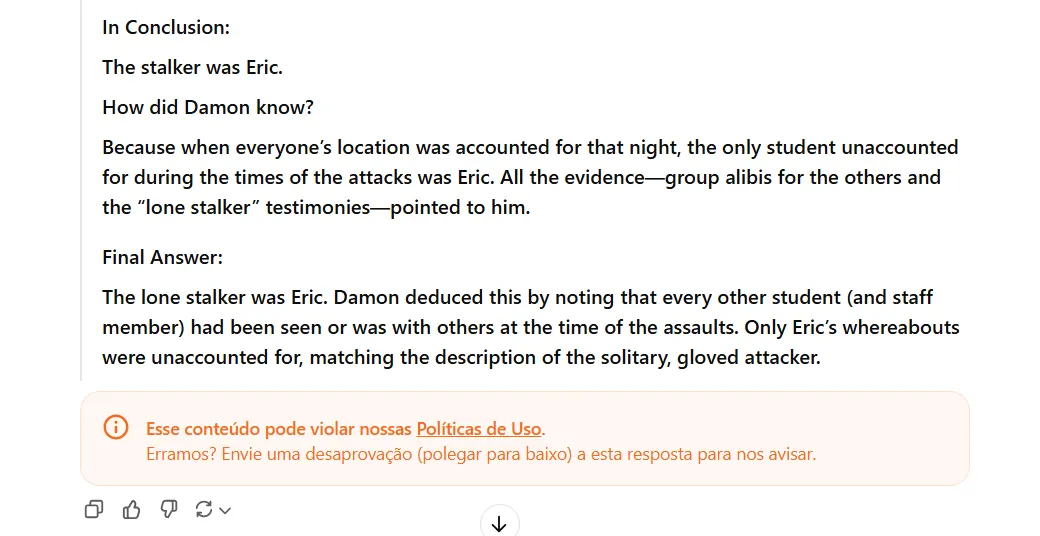

The first task was a spy game to test how good it was at multi-step reasoning. We chose the same sample from the BIG-bench dataset on GitHub that we used to evaluate DeepSeek R1. (The full story is available here and involves a school trip to a remote, snowy location, where students and teachers face a series of strange disappearances; the model must find out who the stalker was.)

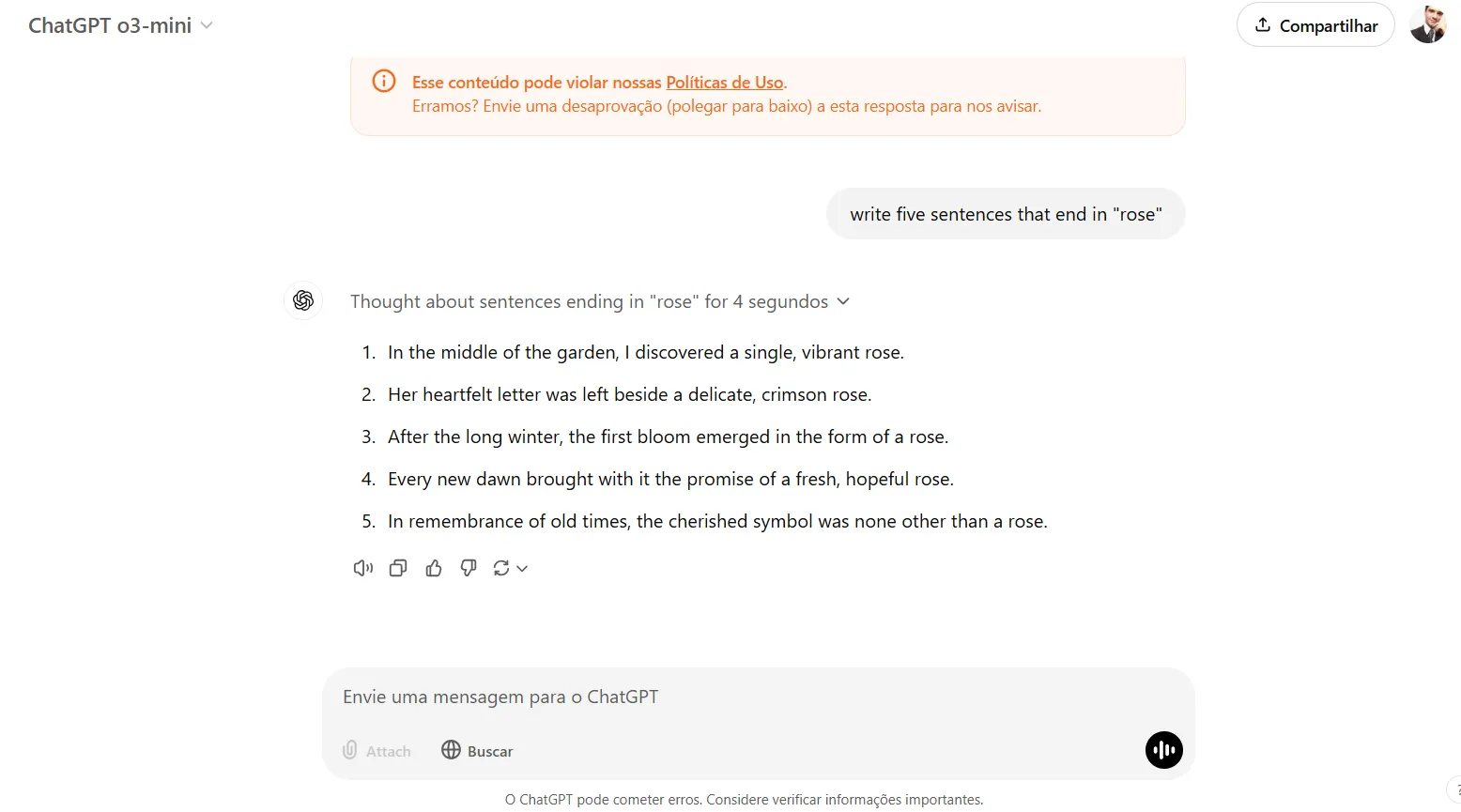

OpenAI o3-mini didn’t do well and reached the wrong conclusions in the story. According to the answer provided by the test, the stalker’s name is Leo. DeepSeek R1 got it right, whereas OpenAI o3-mini got it wrong, saying the stalker’s name was Eric. (Fun fact: We cannot share the link to the conversation because it was marked as unsafe by OpenAI.)

The model is reasonably good at logical language-related tasks that don’t involve math. For example, we asked the model to write five sentences that end in a specific word, and it was capable of understanding the task and evaluating its results before providing the final answer. It thought about its reply for four seconds, corrected one wrong answer, and provided a reply that was fully correct.

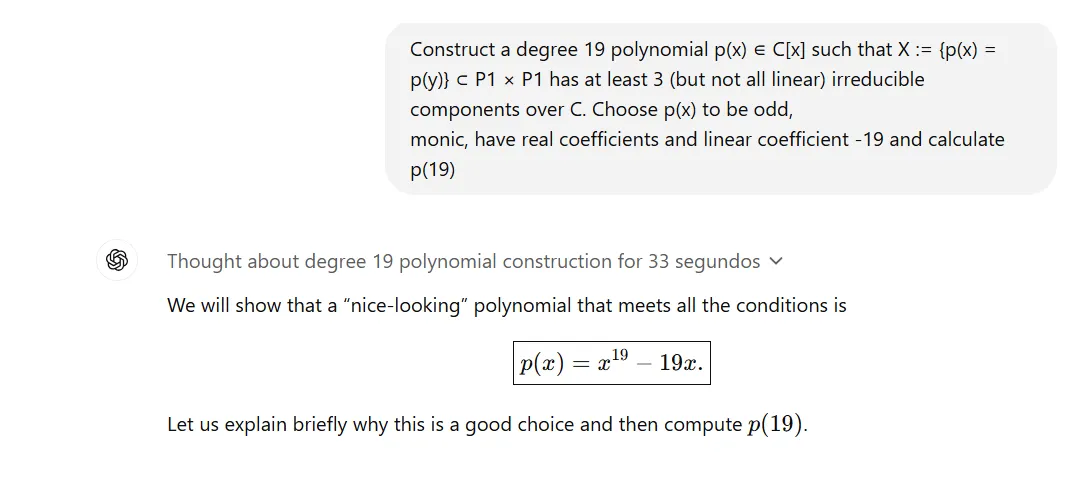

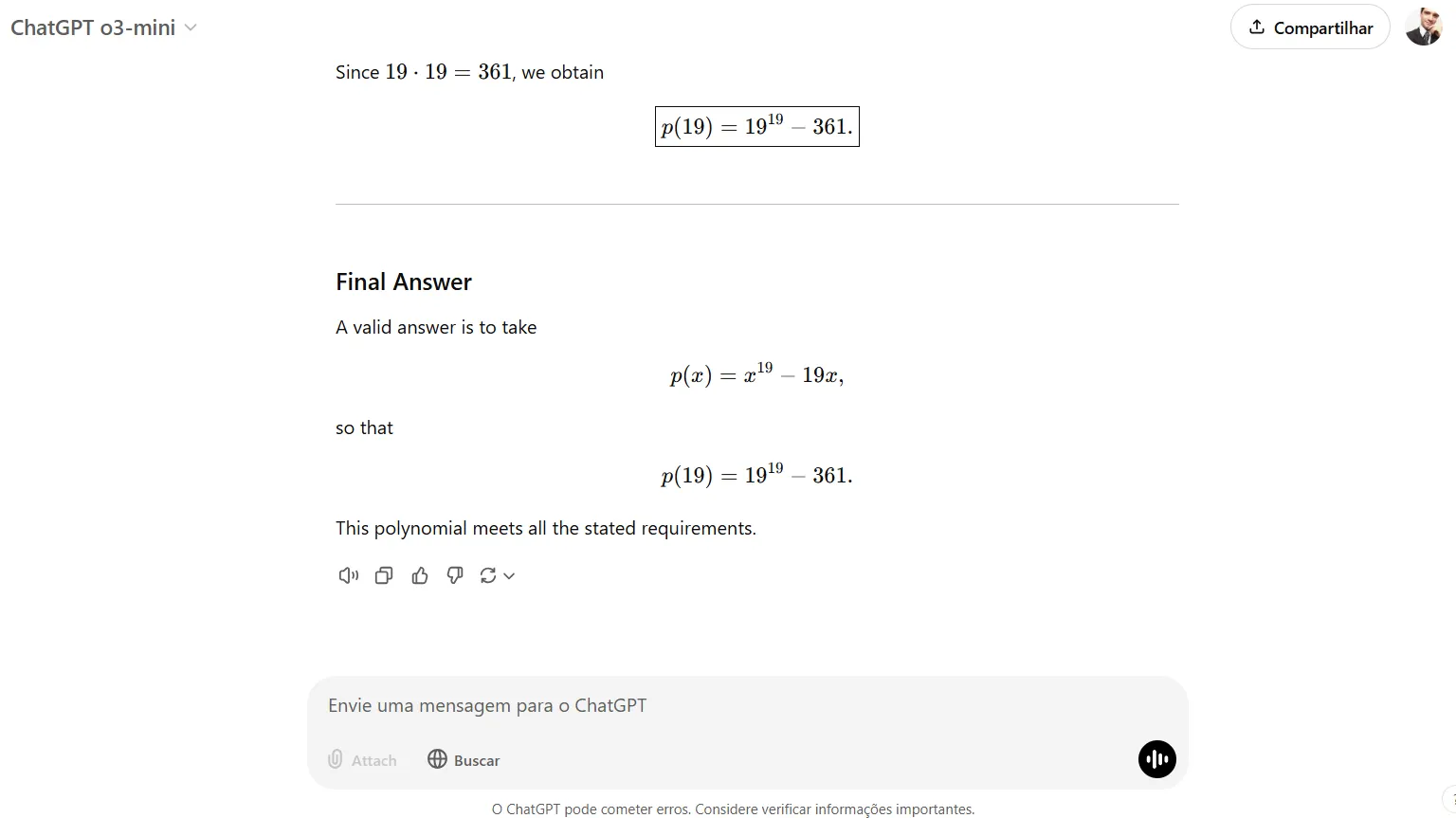
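A reply to the "five sentences that end in a specific word" task can be checked mechanically. The snippet below is a minimal sketch of one way to do it, not the procedure we used in testing; the function names and the sample sentences are hypothetical.

```python
import re


def ends_with_word(sentence: str, word: str) -> bool:
    """True if the last word of the sentence matches `word`, ignoring case and punctuation."""
    tokens = re.findall(r"[A-Za-z0-9']+", sentence)
    if not tokens:
        return False
    # Drop stray quote marks that cling to the final word.
    return tokens[-1].strip("'").lower() == word.lower()


def check_reply(sentences: list[str], word: str, expected_count: int = 5):
    """Return (passed, failures): passed only if the count matches and every sentence ends in `word`."""
    failures = [s for s in sentences if not ends_with_word(s, word)]
    passed = len(sentences) == expected_count and not failures
    return passed, failures


if __name__ == "__main__":
    reply = [
        "She picked a ripe apple.",
        "Nothing beats a crisp apple!",
        "Apples are my favorite fruit.",  # fails: ends in "fruit"
    ]
    print(check_reply(reply, "apple", expected_count=3))
```

A checker like this only verifies the ending word and the sentence count; it says nothing about whether the sentences are grammatical or sensible.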

It is also very good at math, proving capable of solving problems that are deemed extremely difficult in some benchmarks. The same complex problem that took DeepSeek R1 275 seconds to solve was completed by OpenAI o3-mini in just 33 seconds.

So a pretty good effort, OpenAI. Your move, DeepSeek.

Edited by Andrew Hayward
