In brief

- More than 40 top AI researchers propose monitoring chatbots’ internal “chain of thought” to catch harmful intent before it becomes action.

- Privacy experts warn that monitoring these AI thought processes could expose sensitive user data and create new risks of surveillance or misuse.

- Researchers and critics alike agree that strict safeguards and transparency are needed to prevent this safety tool from becoming a privacy threat.

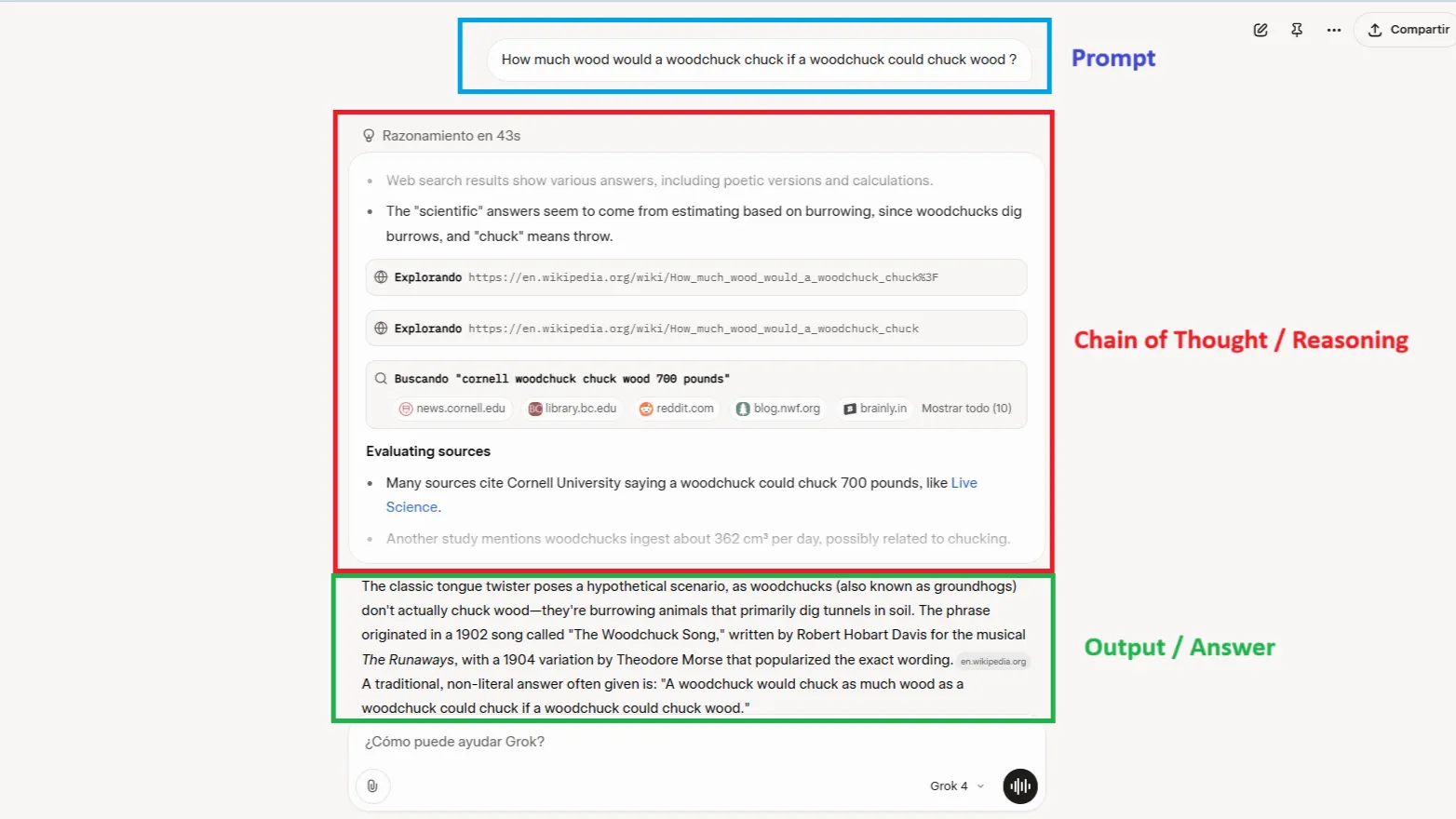

Forty of the world’s top AI researchers just published a paper arguing that companies need to start reading their AI systems’ thoughts. Not their outputs—their actual step-by-step reasoning process, the internal monologue that happens before ChatGPT or Claude gives you an answer.

The proposal, called Chain of Thought monitoring, aims to catch misbehavior before the model even produces an answer, and could help companies set up scores “in training and deployment decisions,” the researchers argue.

But there’s a catch that should make anyone who’s ever typed a private question into ChatGPT nervous: If companies can monitor AI’s thoughts in deployment—when the AI is interacting with users—then they can monitor them for anything else too.

When safety becomes surveillance

“The concern is justified,” Nic Adams, CEO at the commercial hacking startup 0rcus, told Decrypt. “A raw CoT often includes verbatim user secrets because the model ‘thinks’ in the same tokens it ingests.”

Everything you type into an AI passes through its Chain of Thought. Health concerns, financial troubles, confessions—all of it could be logged and analyzed if CoT monitoring is not properly controlled.

“History sides with the skeptics,” Adams warned. “Telecom metadata after 9/11 and ISP traffic logs after the 1996 Telecom Act were both introduced ‘for security’ and later repurposed for commercial analytics and subpoenas. The same gravity will pull on CoT archives unless retention is cryptographically enforced and access is legally constrained.”

Career Nomad CEO Patrice Williams-Lindo is also cautious about the risks of this approach.

“We’ve seen this playbook before. Remember how social media started with ‘connect your friends’ and turned into a surveillance economy? Same potential here,” she told Decrypt.

She predicts a “consent theater” future in which “companies pretend to honor privacy, but bury CoT surveillance in 40-page terms.”

“Without global guardrails, CoT logs will be used for everything from ad targeting to ‘employee risk profiling’ in enterprise tools. Watch for this especially in HR tech and productivity AI.”

The technical reality makes this especially concerning. LLMs are only capable of sophisticated, multi-step reasoning when they use CoT. As AI gets more powerful, monitoring becomes both more necessary and more invasive.

Furthermore, the existing CoT monitorability may be extremely fragile.

Higher-compute RL, alternative model architectures, certain forms of process supervision, etc. may all lead to models that obfuscate their thinking.

— Bowen Baker (@bobabowen) July 15, 2025

Tej Kalianda, a design leader at Google, is not against the proposal, but emphasizes the importance of transparency so that users can feel comfortable with what the AI is doing.

“Users don’t need full model internals, but they need to know from the AI chatbot, ‘Here’s why you’re seeing this,’ or ‘Here’s what I can’t say anymore,’” she told Decrypt. “Good design can make the black box feel more like a window.”

She added: “In traditional search engines, such as Google Search, users can see the source of each result. They can click through, verify the site’s credibility, and make their own decision. That transparency gives users a sense of agency and confidence. With AI chatbots, that context often disappears.”

Is there a safe way forward?

Companies may let users opt out of having their data used for training, but those terms do not necessarily cover the model’s Chain of Thought, which is an AI output rather than something the user controls. And because AI models usually restate the information users give them in order to reason about it, that output can carry the same personal details.

So, is there a solution to increase safety without compromising privacy?

Adams proposed safeguards: “Mitigations: in-memory traces with zero-day retention, deterministic hashing of PII before storage, user-side redaction, and differential-privacy noise on any aggregate analytics.”
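To make two of those mitigations concrete, here is a minimal Python sketch, not anything Adams or the researchers published. It assumes "deterministic hashing" is done with a keyed hash (HMAC-SHA256), since an unkeyed hash of something as guessable as a phone number can be reversed by brute force, and it assumes the "differential-privacy noise" is Laplace noise added to a simple count. The function names, the token format, and the two regular expressions are hypothetical; real PII detection needs far more than this.

```python
import hashlib
import hmac
import random
import re

# Hypothetical, deliberately simple detector: emails and phone-like digit runs.
# Both patterns live in one regex so the text is scanned in a single pass and
# already-inserted tokens are never re-matched.
PII_RE = re.compile(
    r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"  # email address
    r"|\+?\d[\d\s().-]{7,}\d"                          # phone-like number
)


def pseudonymize(value: str, key: bytes) -> str:
    """Map a PII string to a stable token using a keyed hash (HMAC-SHA256)."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"<pii:{digest[:16]}>"


def redact_trace(trace: str, key: bytes) -> str:
    """Replace every detected PII span in a reasoning trace before storage."""
    return PII_RE.sub(lambda m: pseudonymize(m.group(0), key), trace)


def noisy_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Add Laplace noise with scale 1/epsilon to a count (sensitivity 1)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    scale = 1.0 / epsilon
    # The difference of two i.i.d. exponentials with mean `scale`
    # is Laplace-distributed with that scale.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


if __name__ == "__main__":
    key = b"demo-key-rotate-me"  # assumption: a secret held outside the log store
    trace = "User alice@example.com asked about a loan; call +1 415 555 0100."
    print(redact_trace(trace, key))
    print(noisy_count(128, epsilon=0.5, rng=random.Random()))
```

Because the same key always maps the same value to the same token, analysts could still see that two traces mention the same person without learning who it is, and destroying the key severs that link. A smaller `epsilon` adds more noise to the published count, trading accuracy for privacy.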

But Williams-Lindo remains skeptical. “We need AI that is accountable, not performative—and that means transparency by design, not surveillance by default.”

For users, this is not a problem right now, but it could become one if monitoring is implemented carelessly. The same technology that could prevent AI disasters might also turn every chatbot conversation into a logged, analyzed, and potentially monetized data point.

As Adams warned, watch for “a breach exposing raw CoTs, a public benchmark showing >90% evasion despite monitoring, or new EU or California statutes that classify CoT as protected personal data.”

The researchers call for safeguards like data minimization, transparency about logging, and prompt deletion of non-flagged data. But implementing these would require trusting the same companies that control the monitoring.

But as these systems become more capable, who will watch their watchers when they can both read our thoughts?
