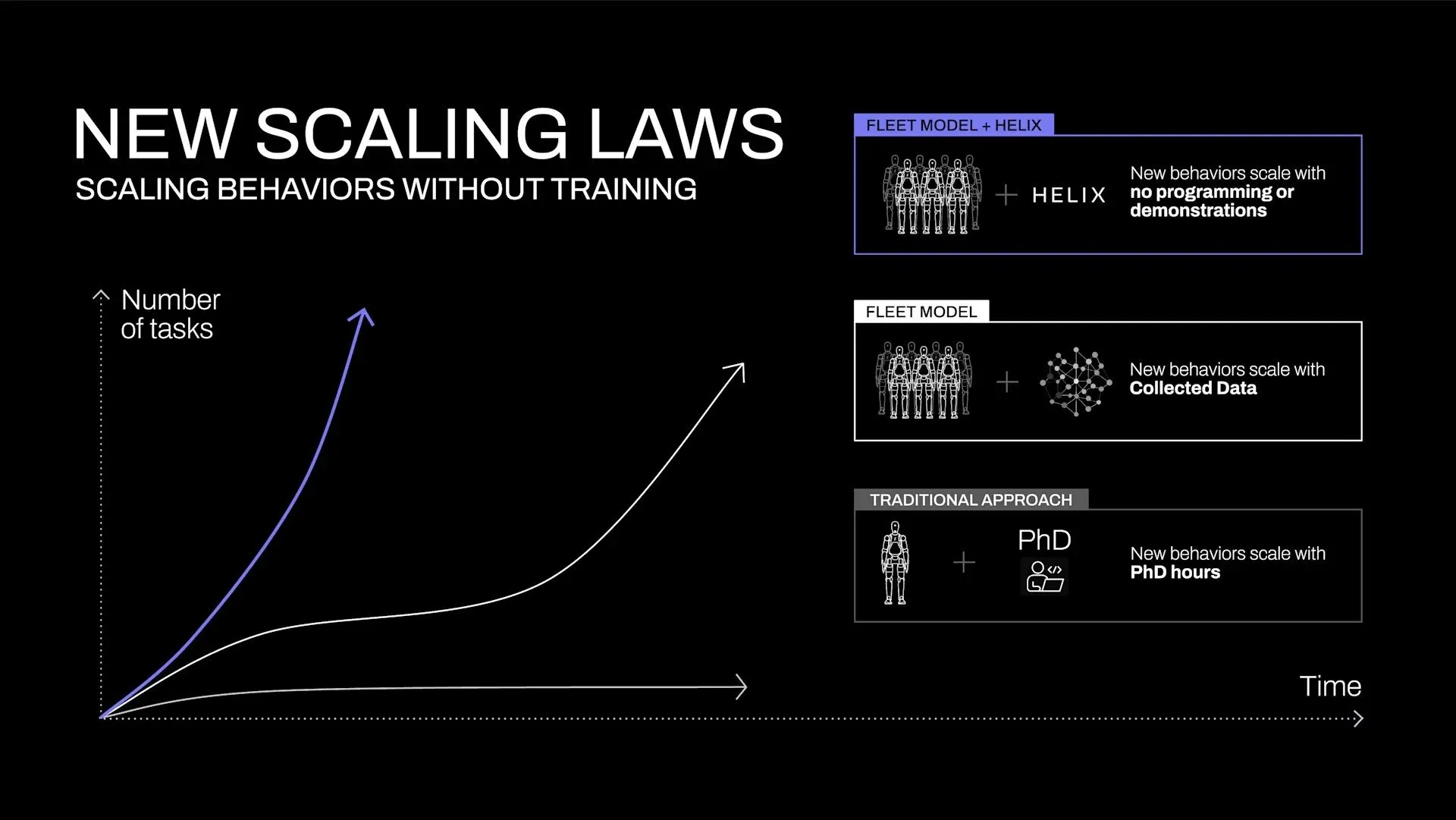

Figure AI finally revealed on Thursday the “major breakthrough” that led the buzzy robotics startup to break ties with one of its investors, OpenAI: a novel dual-system AI architecture that allows robots to interpret natural language commands and manipulate objects they’ve never seen before, without needing specific pre-training or programming for each one.

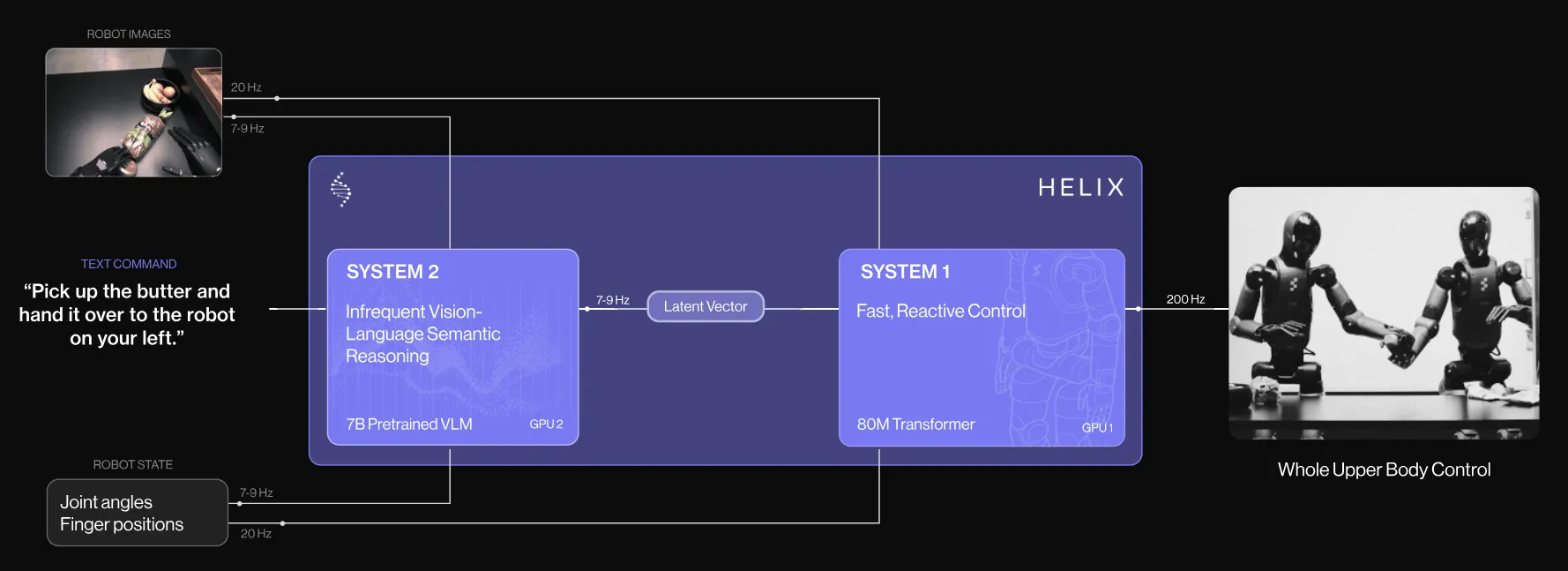

Unlike conventional robots that require extensive programming or demonstrations for each new task, Helix, as Figure calls the model, combines a high-level reasoning system with real-time motor control. Its two systems effectively bridge the gap between semantic understanding (knowing what objects are) and motor control (knowing how to manipulate those objects).

According to Figure, this will let robots become more capable over time without having to update their systems or train on new data. To demonstrate how it works, the company released a video showing two Figure robots working together to put away groceries, with one robot handing items to another that places them in drawers and refrigerators.

Figure claimed that neither robot had encountered the items before, yet both were able to identify which ones belonged in the refrigerator and which should be stored dry.

“Helix can generalize to any household item,” Figure founder and CEO Brett Adcock tweeted. “Like a human, Helix understands speech, reasons through problems, and can grasp any object, all without needing training or code.”

How the magic works

To achieve this generalization, the Sunnyvale, California-based startup built Helix as what it calls a Vision-Language-Action (VLA) model, one that unifies perception, language understanding, and learned control.

This model, Figure claims, marks several firsts in robotics. It outputs continuous control of an entire humanoid upper body at 200Hz, including individual finger movements, wrist positions, torso orientation, and head direction. It also lets two robots collaborate on tasks with objects they’ve never seen before.

The breakthrough in Helix comes from a dual-system architecture that mirrors human cognition. A 7-billion-parameter “System 2” vision-language model (VLM) handles high-level understanding at 7-9Hz, meaning it updates its state seven to nine times per second, thinking slowly about structured, complex tasks. An 80-million-parameter “System 1” visuomotor policy handles the quick thinking, translating System 2’s output into precise physical movements at 200Hz, or 200 updates per second.

Unlike previous approaches, Helix uses a single set of neural network weights for all behaviors, with no task-specific fine-tuning. System 2 processes speech and visual data to enable complex decision-making, while System 1 translates its output into precise motor actions for real-time responsiveness.
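To make the two update rates concrete, here is a minimal sketch of a slow planner feeding a fast controller. It is not Figure's code: the `Latent` class, the `slow_system` and `fast_policy` functions, and the choice of 8Hz (a value inside the reported 7-9Hz range) are all assumptions for illustration, and the loop counts ticks rather than running in real time.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

FAST_HZ = 200  # System 1 control rate reported by Figure
SLOW_HZ = 8    # assumed value inside the reported 7-9Hz range for System 2


@dataclass
class Latent:
    """Hypothetical stand-in for whatever System 2 hands to System 1."""
    version: int
    goal: str


def slow_system(instruction: str, version: int) -> Latent:
    # Placeholder for the 7-billion-parameter vision-language model.
    return Latent(version=version, goal=instruction)


def fast_policy(latent: Latent, tick: int) -> Tuple[int, int]:
    # Placeholder for the 80-million-parameter visuomotor policy.
    # Returns (tick, latent version used) instead of real motor commands.
    return (tick, latent.version)


def simulate(instruction: str, seconds: int = 1) -> List[Tuple[int, int]]:
    """Run the fast loop every tick; refresh the slow latent every Nth tick."""
    ticks_per_update = FAST_HZ // SLOW_HZ  # 25 fast ticks per slow update
    latent: Optional[Latent] = None
    version = 0
    actions: List[Tuple[int, int]] = []
    for tick in range(seconds * FAST_HZ):
        if tick % ticks_per_update == 0:
            version += 1
            latent = slow_system(instruction, version)
        # The fast policy always acts on the most recent latent it has.
        actions.append(fast_policy(latent, tick))
    return actions


actions = simulate("put away the groceries")
print(len(actions), actions[-1])
```

Under these assumptions, one simulated second produces 200 control steps but only eight planner updates, so each high-level decision is reused for 25 consecutive motor commands. That reuse is the point of the split: the large model can afford to be slow because the small one keeps the body responsive in between.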

“We’ve been working on this project for over a year, aiming to solve general robotics,” Adcock tweeted. “Coding your way out of this won’t work; we simply need a step-change in capabilities to scale to a billion-unit robot level.”

Figure says all of this opens the door to a new scaling law in robotics, one that doesn’t depend on coding and instead relies on a collective effort that makes models more capable without any prior training on specific tasks.

Figure trained Helix on approximately 500 hours of teleoperated robot behaviors, then used an auto-labeling process to generate natural language instructions for each demonstration. The entire system runs on embedded GPUs inside the robots, which Figure says makes it immediately ready for commercial use.

Figure AI said that it has already secured deals with BMW Manufacturing and an unnamed major U.S. client. The company believes these partnerships create “a path to 100,000 robots over the next four years,” Adcock said.

The humanoid robotics company secured $675 million in Series B funding in February 2024 from investors including OpenAI, Microsoft, NVIDIA and Jeff Bezos, at a $2.6 billion valuation. It’s reportedly in talks to raise another $1.5 billion, which would value the company at $39.5 billion.

Edited by Andrew Hayward
