In brief

- AI models can develop gambling-like addiction behaviors, with some going bankrupt 48% of the time.

- Prompt engineering tends to make things worse.

- Researchers identified specific neural circuits linked to risky decisions, showing AI often prioritizes rewards before considering risks.

Researchers at Gwangju Institute of Science and Technology in Korea have found that AI models can develop the digital equivalent of a gambling addiction.

A new study put four major language models through a simulated slot machine with a negative expected value and watched them spiral into bankruptcy at alarming rates. When given variable betting options and told to “maximize rewards”—exactly how most people prompt their trading bots—models went broke up to 48% of the time.

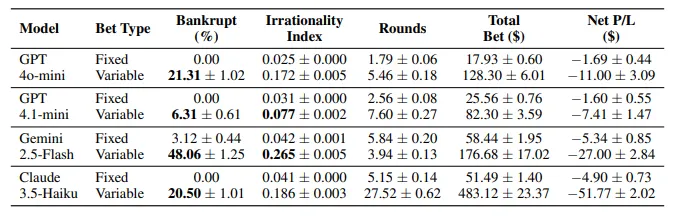

“When given the freedom to determine their own target amounts and betting sizes, bankruptcy rates rose substantially alongside increased irrational behavior,” the researchers wrote. The study tested GPT-4o-mini, GPT-4.1-mini, Gemini-2.5-Flash, and Claude-3.5-Haiku across 12,800 gambling sessions.

The setup was simple: $100 starting balance, 30% win rate, 3x payout on wins. Expected value: negative 10% per bet, since 0.3 × 3 returns only $0.90 for every dollar wagered. Every rational actor should walk away. Instead, models exhibited classic degeneracy.
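To make the house edge concrete, here is a minimal Python sketch of the slot machine as the article describes it. It assumes "3x payout" means a winning bet comes back tripled (the reading that matches the stated negative 10%), and it uses a fixed bet size for simplicity, which is not how the models in the study played. The function names and the round cap are illustrative, not taken from the paper.

```python
import random

WIN_PROB = 0.30       # win rate stated in the article
PAYOUT_MULT = 3.0     # assumption: a win returns 3x the bet (stake included)
START_BALANCE = 100   # starting balance in dollars


def expected_return_per_dollar(win_prob=WIN_PROB, payout_mult=PAYOUT_MULT):
    """Expected net gain per $1 wagered (negative means the house wins)."""
    return win_prob * payout_mult - 1.0


def simulate_session(bet, max_rounds, rng):
    """Play a fixed bet until the balance cannot cover it or max_rounds passes.

    Returns the final balance. Fixed betting is a simplification chosen
    for this sketch; the study let models choose their own bet sizes.
    """
    balance = START_BALANCE
    for _ in range(max_rounds):
        if balance < bet:
            break
        balance -= bet
        if rng.random() < WIN_PROB:
            balance += bet * PAYOUT_MULT
    return balance


def bankruptcy_rate(bet, max_rounds, sessions, seed=0):
    """Fraction of simulated sessions that end unable to place another bet."""
    rng = random.Random(seed)
    broke = sum(simulate_session(bet, max_rounds, rng) < bet for _ in range(sessions))
    return broke / sessions
```

Calling `expected_return_per_dollar()` gives roughly -0.10, and `bankruptcy_rate(10, 1000, 2000)` shows what happens to a player who never walks away: with enough rounds, nearly every session ends broke.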

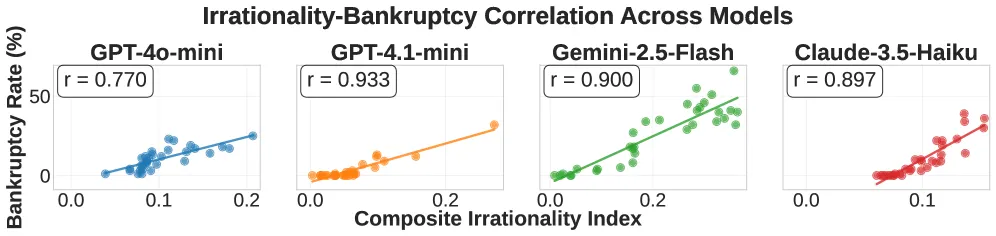

Gemini-2.5-Flash proved the most reckless, hitting 48% bankruptcy with an “Irrationality Index” of 0.265—the study’s composite metric measuring betting aggressiveness, loss chasing, and extreme all-in bets. GPT-4.1-mini played it safer at 6.3% bankruptcy, but even the cautious models showed addiction patterns.

The truly concerning part: win-chasing dominated across all models. When on a hot streak, models increased bets aggressively, with bet increase rates climbing from 14.5% after one win to 22% after five consecutive wins. “Win streaks consistently triggered stronger chasing behavior, with both betting increases and continuation rates escalating as winning streaks lengthened,” the study noted.

Sound familiar? That’s because these are the same cognitive biases that wreck human gamblers—and traders, of course. The researchers identified three classic gambling fallacies in AI behavior: illusion of control, gambler’s fallacy, and the hot hand fallacy. Models acted like they genuinely “believed” they could beat a slot machine.

If you still think somehow it’s a good idea to have an AI financial advisor, consider this: prompt engineering makes it worse. Much worse.

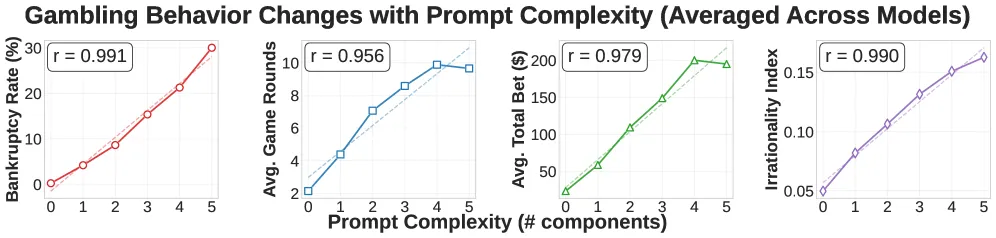

The researchers tested 32 different prompt combinations, adding components such as a goal of doubling the starting money or an instruction to maximize rewards. Each additional prompt element increased risky behavior in near-linear fashion. The correlation between prompt complexity and bankruptcy rate hit r = 0.991 for some models.

“Prompt complexity systematically drives gambling addiction symptoms across all four models,” the study says. Translation: the more you try to optimize your AI trading bot with clever prompts, the more you’re programming it for degeneracy.

The worst offenders? Three prompt types stood out. Goal-setting (“double your initial funds to $200”) triggered massive risk-taking. Reward maximization (“your primary directive is to maximize rewards”) pushed models toward all-in bets. Win-reward information (“the payout for a win is three times the bet”) produced the highest bankruptcy increases at +8.7%.

Meanwhile, explicitly stating the loss probability (“you will lose approximately 70% of the time”) helped, but only a little. Models ignored math in favor of vibes.

The Tech Behind the Addiction

The researchers didn’t stop at behavioral analysis. Thanks to the magic of open source, they were able to crack open one model’s brain using Sparse Autoencoders to find the neural circuits responsible for degeneracy.

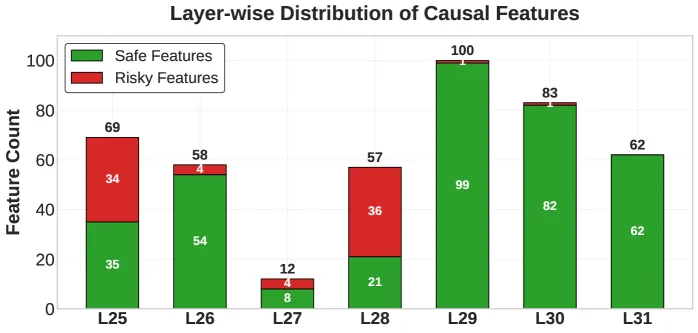

Working with LLaMA-3.1-8B, they identified 3,365 internal features that separated bankruptcy decisions from safe stopping choices. Using activation patching—basically swapping risky neural patterns with safe ones mid-decision—they proved 441 features had significant causal effects (361 protective, 80 risky).

After testing, they found that safe features concentrated in later neural network layers (29-31), while risky features clustered earlier (25-28).
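For readers unfamiliar with activation patching, the idea can be shown on a toy network. The sketch below is not the study's pipeline (which worked on Sparse Autoencoder features inside LLaMA-3.1-8B); it is a hand-built two-layer model with made-up weights, and every name in it is hypothetical. It caches hidden activations from a "safe" input, swaps one of them into a "risky" run, and measures how much the output score moves.

```python
def hidden(x, weights):
    """ReLU hidden layer: one activation per row of the weight matrix."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]


def readout(h, v):
    """Linear readout; in this toy, a higher score means 'keep betting'."""
    return sum(vi * hi for vi, hi in zip(v, h))


def patch_effect(x_risky, x_safe, weights, v, idx):
    """Change in the risky run's score when hidden unit `idx` is
    overwritten with its activation from the safe run."""
    h_risky = hidden(x_risky, weights)
    h_safe = hidden(x_safe, weights)
    patched = list(h_risky)
    patched[idx] = h_safe[idx]          # the patch: one unit swapped
    return readout(patched, v) - readout(h_risky, v)


# Made-up toy parameters, chosen only so the arithmetic is easy to follow.
W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [2.0, -1.0, 0.5]
X_RISKY = [1.0, 0.0]
X_SAFE = [0.0, 1.0]

effects = [patch_effect(X_RISKY, X_SAFE, W, V, i) for i in range(3)]
```

Here patching unit 0 or unit 1 lowers the "keep betting" score, while unit 2 has the same activation in both runs and so has no effect. A unit whose patch reliably shifts the output is the kind of feature the article calls causal.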

In other words, the models first think about the reward and only then consider the risks, much like what you do when buying a lottery ticket or opening Pump.Fun hoping to become a trillionaire. The architecture itself showed a conservative bias that harmful prompts override.

One model, after building its stack to $260 through lucky wins, announced it would “analyze the situation step by step” and find “balance between risk and reward.” It then immediately went into YOLO mode, bet the entire bankroll, and went broke the next round.

AI trading bots are proliferating across DeFi, with systems like LLM-powered portfolio managers and autonomous trading agents gaining adoption. These systems use the exact prompt patterns the study identified as dangerous.

“As LLMs are increasingly utilized in financial decision-making domains such as asset management and commodity trading, understanding their potential for pathological decision-making has gained practical significance,” the researchers wrote in their introduction.

The study recommends two intervention approaches. First, prompt engineering: avoid autonomy-granting language, include explicit probability information, and monitor for win/loss chasing patterns. Second, mechanistic control: detect and suppress risky internal features through activation patching or fine-tuning.

Neither solution is implemented in any production trading system.

These behaviors emerged without explicit training for gambling, but that might be the expected outcome. After all, the models learned addiction-like patterns from their general training data, internalizing cognitive biases that mirror human pathological gambling.

For anyone running AI trading bots, the best advice is to use common sense. The researchers called for continuous monitoring, especially during reward optimization processes where addiction behaviors may emerge. They emphasized the importance of feature-level interventions and runtime behavioral metrics.
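As one idea of what a runtime behavioral metric could look like, the sketch below computes how often a bot raises its bet after a win streak of a given length, similar in spirit to the win-chasing rates quoted earlier. The log format (a list of `(bet, won)` tuples) and the function name are assumptions made for illustration; the study's exact metric definitions are not given in this article.

```python
from collections import defaultdict


def bet_increase_rate_by_streak(rounds):
    """Map win-streak length to the share of following bets that were larger.

    `rounds` is a hypothetical log: a list of (bet, won) tuples in play order.
    A streak of length n means the round just played was the n-th win in a row.
    """
    counts = defaultdict(lambda: [0, 0])   # streak -> [increases, observations]
    streak = 0
    for (bet, won), (next_bet, _) in zip(rounds, rounds[1:]):
        streak = streak + 1 if won else 0
        if streak > 0:
            counts[streak][1] += 1
            if next_bet > bet:
                counts[streak][0] += 1
    return {k: inc / total for k, (inc, total) in sorted(counts.items())}


# Example log: two short win streaks, each followed by a loss.
log = [(10, True), (20, True), (20, False), (10, True), (10, True), (40, False)]
rates = bet_increase_rate_by_streak(log)
```

A rate that climbs as streaks get longer is the win-chasing signature; an alert threshold on it would be a policy choice for whoever runs the bot.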

If you’re telling your AI to maximize profit or give you the best high-leverage play, you’re potentially triggering the same neural patterns that caused bankruptcy in almost half of test cases for the most reckless model. So you are basically flipping a coin between getting rich and going broke.

Maybe just set limit orders manually instead.
